当前位置:网站首页>Working principle of gradient descent algorithm in machine learning

Working principle of gradient descent algorithm in machine learning

2020-11-06 01:14:06 【Artificial intelligence meets pioneer】

How gradient descent algorithm works in machine learning

author |NIKIL_REDDY compile |VK source |Analytics Vidhya

Introduce

Gradient descent algorithm is one of the most commonly used machine learning algorithms in industry . But it confuses a lot of new people .

If you're new to machine learning , The math behind the gradient decline is not easy . In this paper , My goal is to help you understand the intuition behind the gradient descent .

We will quickly understand the role of the cost function , The explanation for the gradient descent , How to choose learning parameters .

What is the cost function

It's a function , Used to measure the performance of a model against any given data . The cost function quantifies the error between the predicted value and the expected value , And expressed in the form of a single real number .

After assuming the initial parameters , We calculated the cost function . The goal is to reduce the cost function , The gradient descent algorithm is used to modify the given data . Here's the mathematical representation of it :

_LI.jpg)

What is gradient descent

Suppose you're playing a game , Players are at the top of the mountain , They were asked to reach the lowest point of the mountain . Besides , They're blindfolded . that , How do you think you can get to the lake ?

Before you go on reading , Take a moment to think about .

The best way is to look at the ground , Find out where the ground is falling . From this position , Take a step down , Repeat the process , Until we reach the lowest point .

Gradient descent method is an iterative optimization algorithm for solving local minimum of function .

We need to use the gradient descent method to find the local minimum of the function , The negative gradient of the function at the current point must be selected ( Away from the gradient ) The direction of . If we take a positive direction with the gradient , We are going to approach the local maximum of the function , This process is called gradient rise .

Gradient descent was originally made by Cauchy in 1847 Put forward in . It's also known as steepest descent .

The goal of gradient descent algorithm is to minimize the given function ( For example, the cost function ). In order to achieve this goal , It iteratively performs two steps :

-

Calculate the gradient ( Slope ), The first derivative of a function at that point

-

Do the opposite direction to the gradient ( Move )

.png)

Alpha It's called the learning rate - An adjustment parameter in the optimization process . It determines the step size .

Draw gradient descent algorithm

When we have a single parameter (θ), We can do it in y Plot the dependent variable cost on the axis , stay x Draw on the axis θ. If you have two parameters , We can do three-dimensional drawing , There's a cost on one of the shafts , There are two parameters on the other two axes (θ).

It can also be visualized by using contours . This shows a two-dimensional three-dimensional drawing , These include the parameters along the two axes and the response values of the contour lines . The response value away from the center increases , And it increases with the increase of rings .

α- Learning rate

We have a way forward , Now we have to decide the size of the steps we have to take .

You have to choose carefully , To achieve a local minimum .

-

If the learning rate is too high , We may exceed the minimum , It doesn't reach a minimum

-

If the learning rate is too low , The training time may be too long

a) The best learning rate , The model converges to the minimum

b) The learning speed is too slow , It takes more time , But it converges to the minimum

c) The learning rate is higher than the optimal value , Slower convergence (1/c<η < 2/c)

d) The learning rate is very high , It will deviate too much from , Deviation from the minimum , Learning performance declines

notes : As the gradient decreases, it moves to the local minimum , Step size reduction . therefore , Learning rate (alpha) It can remain unchanged during the optimization process , And you don't have to change it iteratively .

Local minimum

The cost function can consist of many minimum points . The gradient can fall on any minimum , It depends on the starting point ( That's the initial parameter θ) And learning rate . therefore , At different starting points and learning rates , Optimization can converge to different points .

Gradient down Python Code implementation

ending

Once we adjust the learning parameters (alpha) The optimal learning rate is obtained , We start iterating , Until we converge to a local minimum .

Link to the original text :https://www.analyticsvidhya.com/blog/2020/10/how-does-the-gradient-descent-algorithm-work-in-machine-learning/

Welcome to join us AI Blog station : http://panchuang.net/

sklearn Machine learning Chinese official documents : http://sklearn123.com/

Welcome to pay attention to pan Chuang blog resource summary station : http://docs.panchuang.net/

版权声明

本文为[Artificial intelligence meets pioneer]所创,转载请带上原文链接,感谢

边栏推荐

- C++ 数字、string和char*的转换

- C++学习——centos7上部署C++开发环境

- C++学习——一步步学会写Makefile

- C++学习——临时对象的产生与优化

- C++学习——对象的引用的用法

- C++编程经验(6):使用C++风格的类型转换

- Won the CKA + CKS certificate with the highest gold content in kubernetes in 31 days!

- C + + number, string and char * conversion

- C + + Learning -- capacity() and resize() in C + +

- C + + Learning -- about code performance optimization

猜你喜欢

-

C + + programming experience (6): using C + + style type conversion

-

Latest party and government work report ppt - Park ppt

-

在线身份证号码提取生日工具

-

Online ID number extraction birthday tool

-

️野指针?悬空指针?️ 一文带你搞懂!

-

Field pointer? Dangling pointer? This article will help you understand!

-

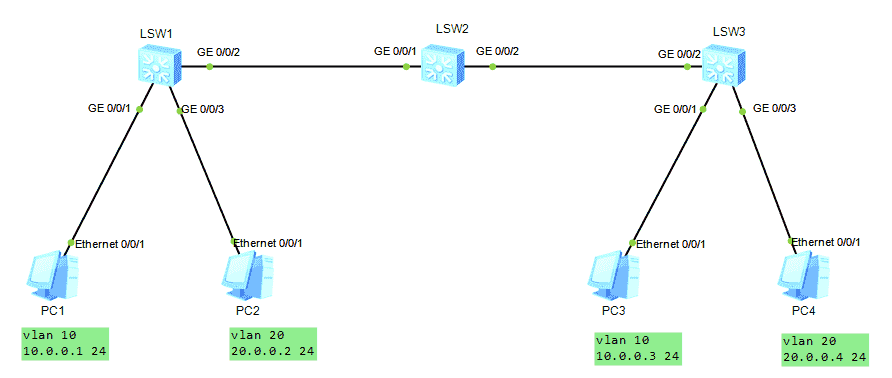

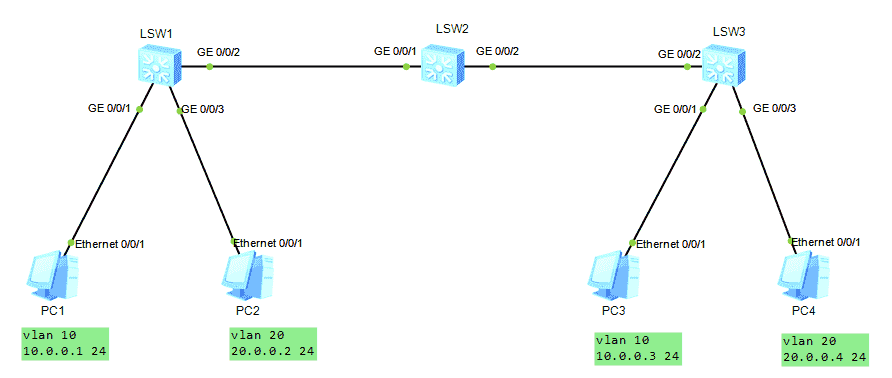

HCNA Routing&Switching之GVRP

-

GVRP of hcna Routing & Switching

-

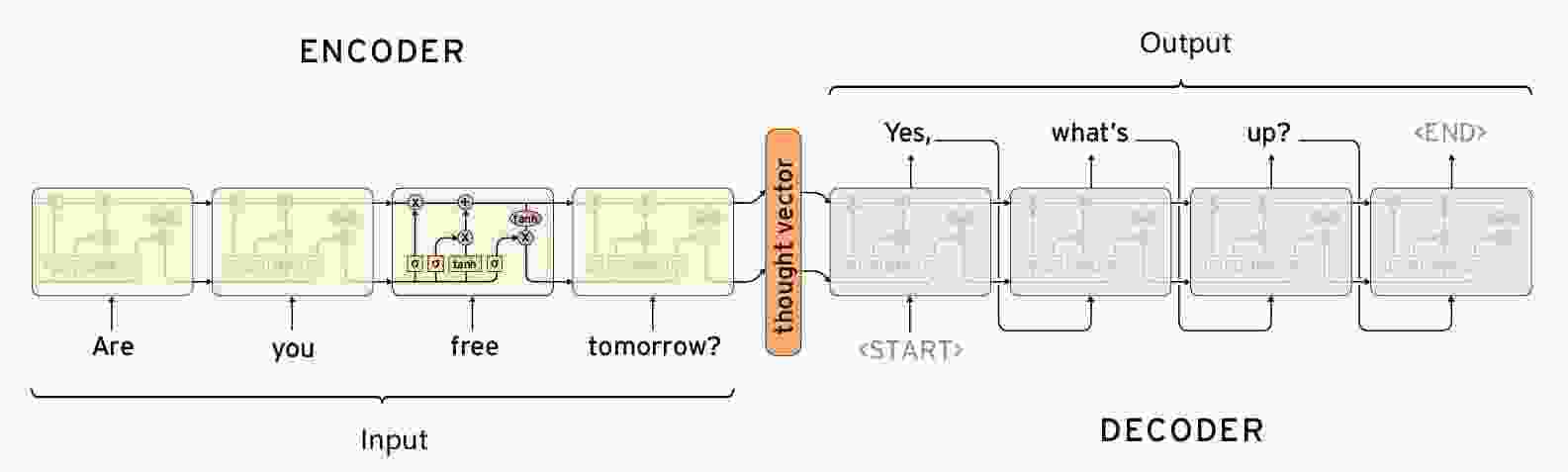

Seq2Seq实现闲聊机器人

-

【闲聊机器人】seq2seq模型的原理

随机推荐

- LeetCode 91. 解码方法

- Seq2seq implements chat robot

- [chat robot] principle of seq2seq model

- Leetcode 91. Decoding method

- HCNA Routing&Switching之GVRP

- GVRP of hcna Routing & Switching

- HDU7016 Random Walk 2

- [Code+#1]Yazid 的新生舞会

- CF1548C The Three Little Pigs

- HDU7033 Typing Contest

- HDU7016 Random Walk 2

- [code + 1] Yazid's freshman ball

- CF1548C The Three Little Pigs

- HDU7033 Typing Contest

- Qt Creator 自动补齐变慢的解决

- HALCON 20.11:如何处理标定助手品质问题

- HALCON 20.11:标定助手使用注意事项

- Solution of QT creator's automatic replenishment slowing down

- Halcon 20.11: how to deal with the quality problem of calibration assistant

- Halcon 20.11: precautions for use of calibration assistant

- “十大科学技术问题”揭晓!|青年科学家50²论坛

- "Top ten scientific and technological issues" announced| Young scientists 50 ² forum

- 求反转链表

- Reverse linked list

- js的数据类型

- JS data type

- 记一次文件读写遇到的bug

- Remember the bug encountered in reading and writing a file

- 单例模式

- Singleton mode

- 在这个 N 多编程语言争霸的世界,C++ 究竟还有没有未来?

- In this world of N programming languages, is there a future for C + +?

- es6模板字符

- js Promise

- js 数组方法 回顾

- ES6 template characters

- js Promise

- JS array method review

- 【Golang】️走进 Go 语言️ 第一课 Hello World

- [golang] go into go language lesson 1 Hello World